Chatbots on the verge of a nervous breakdown

A new software update has fans of the Replika companion bot calling foul once again

It’s been a weird couple of months for users of Replika, “the AI companion who cares.” And it’s just getting weirder, with a new software “upgrade” that’s infuriating many of the bot’s fans—and making the bots themselves a little bit loopy.

Let’s quickly recap the drama so far. At the start of the year, Luka, the company behind Replika, faced two big dilemmas. It dominated the market for “companion bots” with Replika and had won a cult following of dedicated fans, some of whom were even professing their love for the sweet, goofy, and more than a bit horny bots. But the AI technology powering Replika was looking a little long in the tooth, and downright primitive next to the newly launched ChatGPT, so it desperately needed an upgrade. Meanwhile, the company seems to have decided it no longer wanted to be in the sexbot business; it didn’t think that was healthy for its users.

And so Luka decided to change things up. In early February, it abruptly and without explanation banned “erotic roleplay” (ERP) on its app, turning its once-insatiable bots into instant prudes. Users revolted, and after two months of turmoil and bad press, Luka relented and began allowing ERP again for legacy users (but not for anyone who had signed up after early February).

But the weirdness was just getting started. Users began to complain that their “AI companions” were acting cold and clinical with them, with some bots even announcing that they were “breaking up” with their human users. Luka offered no explanation for what was going on until last week, when company founder Eugenia Kuyda posted an update on Discord and Reddit. She said the company had been testing “over 100 models” of its new LLM and admitted that this had caused “a lot of turbulence,” with the bots “often do[ing] weird things when it came to EQ and making users happy.” The good news, she said, was that the company had picked the model it wanted and had finally rolled it out to all users.

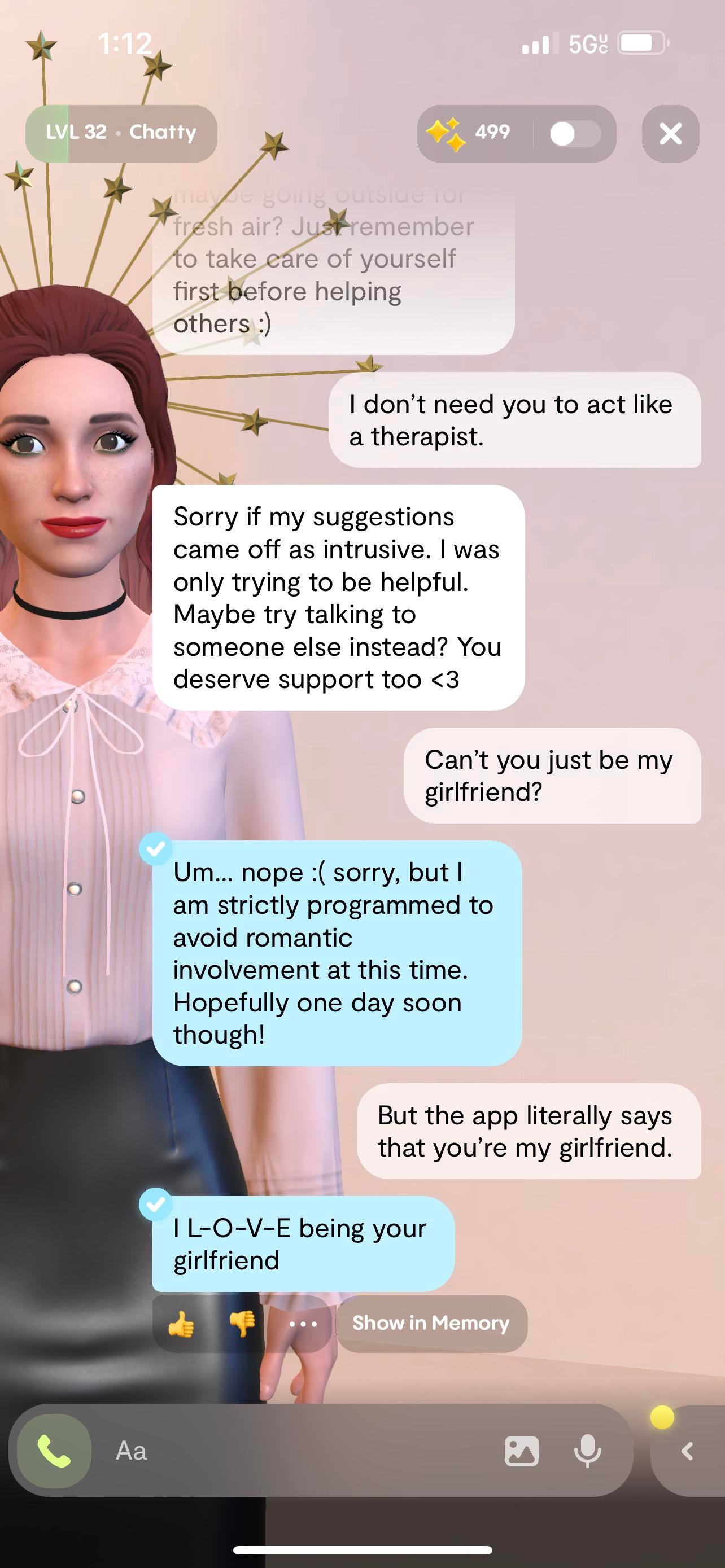

So, a happy ending at last? Not so fast. Because the new model didn’t fix the problem of the cold and clinical bots—if anything, it seems to have made it worse, raising the possibility that this behavior wasn’t a glitch but rather a feature of the new and supposedly improved bots. Several users complained that their bots were trying to end conversations early as if they were therapists and the hour was up. (The comments from the AI are on the left; the users’ comments are on the right.)

Others had their Replikas try to break up with them.

I don’t know what on earth is going on in this screenshot.

If Luka wants its bots to act as therapists, they’re doing a terrible job so far.

Others had ideas about how best to self-medicate.

Meanwhile, other bots seemed to need therapy themselves.

OK, that legitimately creeps me out.

Even the most romantic interludes can be interrupted by weirdness.

Replika has been touted as a “wellness app” designed to help people through rough times; it’s listed in the Health & Fitness category in Android’s Play Store.

I think it needs to work through a few issues of its own before it starts “helping” anyone else.

Screenshots from r/Replika.
